6 Pinecone Vector Database Features You Can Unleash with Airbyte

As volumes of unstructured data such as text, images, and audio grow, traditional databases struggle to capture the semantic relationships and contextual meaning that modern AI applications demand. Data engineers face mounting pressure to implement vector databases that can handle high-dimensional embeddings while meeting enterprise performance and security standards. The challenge grows when organizations need to integrate multiple data sources, automate embedding generation, and keep distributed systems synchronized.

In this article, you will explore Pinecone DB, one of the most popular vector databases, along with an overview of the Pinecone features you can leverage through Airbyte, a data-integration platform that simplifies vector-database management and the building of AI-ready data infrastructure.

What Is Pinecone Vector Database and How Does It Transform Data Management?

Pinecone is a fully managed, cloud-native vector-database platform designed specifically for high-dimensional vector-data operations. The platform excels in machine learning, natural-language processing, and AI applications while offering enterprise-grade flexibility and scalability to accommodate rapidly growing data requirements.

Unlike traditional databases that struggle with semantic relationships, Pinecone DB specializes in storing, indexing, and querying vector embeddings that capture the contextual meaning within unstructured data. This specialization enables organizations to unlock the full potential of their unstructured data assets.

Core Applications and Use Cases

The platform serves as the foundation for critical applications including recommendation systems, semantic-search implementations, and Retrieval-Augmented Generation workflows, dramatically improving both the speed and accuracy of retrieving contextually relevant information from vast knowledge bases.

This optimization is essential for organizations building AI applications that need real-time access to domain-specific knowledge with response times measured in milliseconds. The platform's architecture is designed to keep performance consistent as data volumes grow.

Enterprise Security and Compliance Features

To protect sensitive data from unauthorized access and security breaches, Pinecone implements comprehensive security measures that meet enterprise requirements. The platform employs AES-256 encryption for data at rest, supports Customer-Managed Encryption Keys that give organizations complete control over their encryption infrastructure, and provides Single Sign-On integration along with granular role-based permissions for precise access control.

Additionally, Pinecone maintains compliance with HIPAA, GDPR, and other industry standards, so organizations can deploy vector databases in regulated environments without weakening their security posture.

Why Should You Choose Airbyte for Pinecone Vector Database Integration?

Airbyte represents a transformative approach to data integration, functioning as an AI-powered platform that provides comprehensive connectivity through over 600 pre-built connectors for diverse data sources and destinations. The platform enables organizations to construct sophisticated ELT pipelines that efficiently ingest data from multiple systems while automatically managing schema changes, data transformations, and synchronization complexities that traditionally require extensive manual intervention.

Advanced Transformation and Processing Capabilities

Beyond moving data, Airbyte supports custom connector development through the Connector Builder interface, or through the Connector Development Kit for more complex integration requirements.

Airbyte seamlessly integrates with popular large-language-model frameworks including LangChain and LlamaIndex, while also supporting dbt Cloud integration for sophisticated custom transformations. These transformations prepare data specifically for vector-database applications, ensuring optimal performance and accuracy.

PyAirbyte for Enhanced Developer Experience

PyAirbyte lets data scientists and engineers run Airbyte connectors directly within Python environments. Teams can load results into SQL-compatible caches for immediate use with Pandas, AI frameworks, and machine-learning libraries, bridging data integration and analytical processing in a single workflow.

The platform also provides comprehensive monitoring through detailed pipeline metrics and logs, with native-integration capabilities for enterprise monitoring solutions like Datadog and OpenTelemetry. This monitoring ensures complete visibility into data pipeline performance and health.

Enterprise-Grade Control and Security

For organizations requiring maximum control over sensitive data and deployment environments, Airbyte's self-managed enterprise edition delivers flexible, scalable data-ingestion capabilities while maintaining complete data sovereignty. This deployment option proves essential for regulated industries and organizations operating in environments where data cannot traverse public-cloud infrastructure or where compliance requirements mandate specific security and governance controls.

What Are the Latest Advancements in Pinecone's Vector Database Platform?

Pinecone DB has undergone significant evolution with the introduction of API version 2025-04, representing a fundamental shift toward more sophisticated vector-database capabilities that address enterprise-scale requirements. The platform's latest SDK releases across Python, Node.js, Java, and .NET demonstrate comprehensive improvements in performance, functionality, and developer experience that significantly enhance integration possibilities with platforms like Airbyte.

Performance Improvements and SDK Enhancements

The Python SDK v7.0.0 stands as the flagship advancement, achieving faster client-instantiation times through extensive refactoring and lazy-loading implementation. This performance improvement directly benefits Airbyte integrations by reducing pipeline-initialization overhead and enabling more efficient data-processing workflows.

The SDK now includes native support for asynchronous programming through PineconeAsyncio and IndexAsyncio classes, enabling seamless integration with modern async web frameworks and significantly improving parallel-operation efficiency. These improvements ensure optimal performance when processing large volumes of vector data.
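The benefit of asynchronous clients for parallel operations can be illustrated with a minimal sketch using only Python's standard `asyncio` module. It does not call the Pinecone SDK: `fake_upsert` is a hypothetical stand-in for an asynchronous upsert call, and `max_concurrency` is an assumed tuning parameter, not an SDK setting.

```python
import asyncio


async def fake_upsert(batch_id: int, vectors: list) -> int:
    """Hypothetical stand-in for an async upsert; returns the number of vectors written."""
    await asyncio.sleep(0.01)  # simulate network latency
    return len(vectors)


async def upsert_all(batches: list, max_concurrency: int = 4) -> int:
    """Send all batches concurrently while capping the number of in-flight requests."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(batch_id: int, vectors: list) -> int:
        async with semaphore:
            return await fake_upsert(batch_id, vectors)

    counts = await asyncio.gather(*(guarded(i, b) for i, b in enumerate(batches)))
    return sum(counts)


def run_demo() -> int:
    # Ten batches of five three-dimensional vectors each.
    batches = [[[0.1, 0.2, 0.3]] * 5 for _ in range(10)]
    return asyncio.run(upsert_all(batches))
```

Calling `run_demo()` writes all ten batches with at most four requests in flight at a time. In a real pipeline the semaphore limit would be chosen to respect the service's rate limits.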

Infrastructure Evolution and Deployment Flexibility

Pinecone's serverless architecture eliminates capacity planning by scaling resources automatically with demand. Because the serverless approach decouples storage from compute, it offers deployment flexibility that suits the variable workloads of Airbyte's data-integration pipelines.

The platform now supports deployment across AWS, Microsoft Azure, and Google Cloud Platform, providing organizations with multi-cloud flexibility that optimizes for latency, compliance, and cost considerations. This flexibility ensures organizations can deploy their Pinecone vector database infrastructure where it best serves their operational and regulatory requirements.

Enhanced Security and Data Sovereignty

The introduction of Bring-Your-Own-Cloud deployment addresses critical enterprise-security requirements by allowing organizations to deploy privately managed Pinecone regions within their own cloud accounts. This deployment model ensures complete data sovereignty while maintaining the seamless experience of a fully managed service.

This approach addresses stringent regulatory requirements that previously prevented vector-database adoption in highly regulated industries. Organizations can now leverage advanced vector database capabilities while maintaining complete control over their data location and processing.

Advanced Search and Retrieval Capabilities

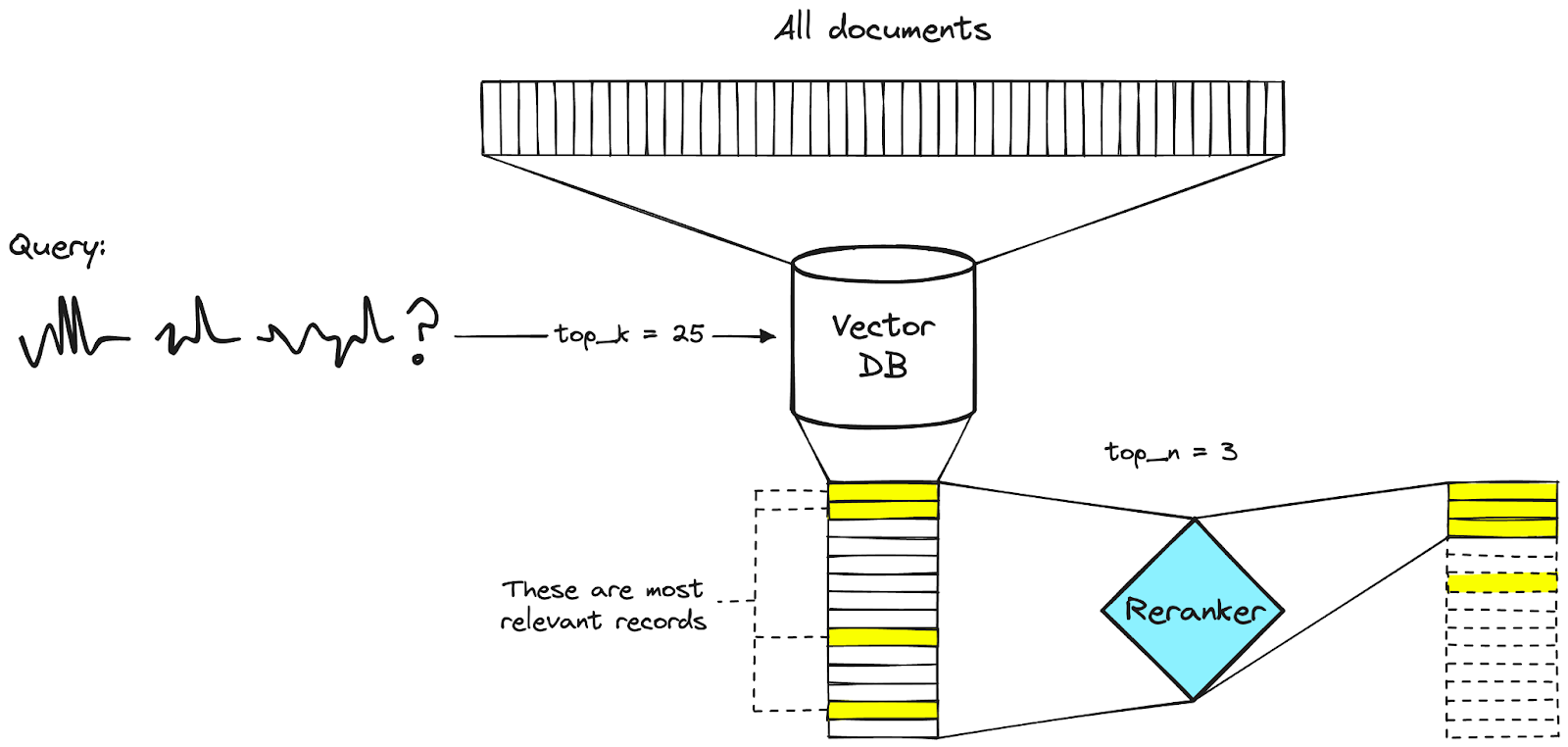

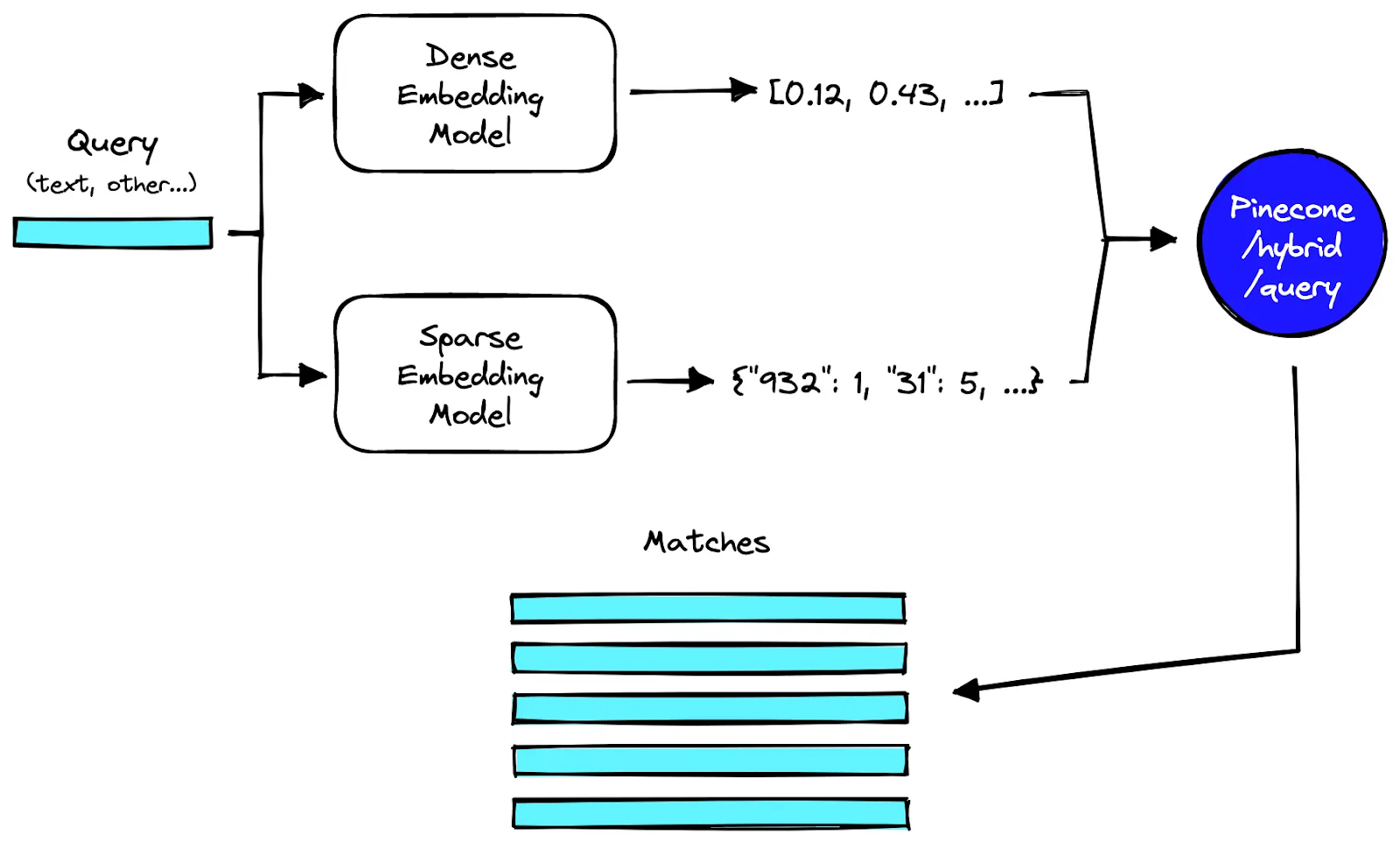

Advanced search capabilities have expanded dramatically with the introduction of sparse-only indexes that support both traditional methods like BM25 and advanced learned-sparse models. Hybrid-search functionality combines sparse and dense embeddings to deliver more robust and accurate search experiences.

Reranking with models like pinecone-rerank-v0 enables cascading retrieval strategies, in which an initial candidate set is rescored to improve result quality. These enhancements help users receive the most relevant and contextually appropriate results for their queries.

How Can Organizations Implement Advanced Vector-Database Integration Strategies?

Modern vector-database implementations require sophisticated integration strategies that go beyond simple data movement to encompass comprehensive pipeline orchestration, quality management, and performance optimization. Organizations must develop multi-layered approaches that address embedding generation, incremental synchronization, metadata management, and real-time monitoring to ensure their vector-database implementations deliver consistent value while maintaining operational reliability.

Intelligent Data Preprocessing and Segmentation

The foundation of successful integration strategies involves implementing intelligent data preprocessing that segments content appropriately for embedding generation while preserving essential metadata relationships. Advanced implementations employ semantic-aware chunking algorithms that maintain contextual coherence rather than relying on simple fixed-size segmentation.

This approach ensures that retrieved passages provide sufficient context for accurate AI-application responses while optimizing storage efficiency and query performance. The preprocessing layer becomes critical for maintaining the quality and usefulness of vector embeddings across diverse data sources.
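As a rough illustration of chunking that respects content boundaries, the sketch below groups whole sentences into chunks and repeats the last sentence of each chunk at the start of the next. It is a simple sentence-boundary approach, not a true semantic-aware algorithm and not Airbyte's implementation; the function name and the `max_chars` and `overlap_sentences` parameters are assumptions made for this example.

```python
import re


def chunk_by_sentences(text: str, max_chars: int = 200, overlap_sentences: int = 1) -> list:
    """Group whole sentences into chunks of roughly max_chars characters.

    The limit is soft: a single long sentence, or carried-over overlap,
    can push a chunk past max_chars.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks = []
    current = []
    for sentence in sentences:
        candidate = " ".join(current + [sentence])
        if current and len(candidate) > max_chars:
            chunks.append(" ".join(current))
            # Carry trailing sentences forward so context spans the chunk boundary.
            current = current[-overlap_sentences:] if overlap_sentences else []
        current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    return chunks
```

For example, `chunk_by_sentences("A one. B two. C three. D four.", max_chars=14)` yields three chunks in which each neighboring pair shares one sentence, so a passage retrieved from the middle still carries some of its surrounding context.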

Quality Management and Monitoring Frameworks

Quality management becomes critical as organizations scale their vector-database implementations across multiple data sources and use cases. Successful strategies implement comprehensive validation frameworks that monitor embedding consistency, detect data drift, and automatically trigger remediation workflows when quality metrics decline below acceptable thresholds.

These frameworks must account for the unique characteristics of high-dimensional data while providing actionable insights that enable proactive optimization. Quality monitoring ensures that vector database performance remains consistent and reliable as data volumes and complexity increase.
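One simple way to detect drift in embeddings is to compare the centroid of a baseline batch with the centroid of a newer batch. The sketch below does this with cosine distance. The 0.1 threshold and all function names are illustrative assumptions; production frameworks typically use richer statistics.

```python
import math


def centroid(vectors: list) -> list:
    """Component-wise mean of a non-empty list of equal-length vectors."""
    dims = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]


def cosine_distance(a: list, b: list) -> float:
    """1 minus cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def drift_exceeds(baseline: list, current: list, threshold: float = 0.1) -> bool:
    """Flag drift when the batch centroids have moved apart by more than threshold."""
    return cosine_distance(centroid(baseline), centroid(current)) > threshold
```

A check like this could run after each sync, with a `True` result triggering whatever remediation workflow the organization has defined.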

Performance Optimization and Resource Management

Performance optimization requires understanding the computational characteristics of vector operations and implementing caching strategies, query-optimization techniques, and resource-allocation policies that balance accuracy against response time. Advanced implementations address capacity and performance challenges primarily through horizontal scaling, distributed computing, load balancing, and data partitioning rather than through automatic provisioning driven by predictive analytics.

Planning capacity this way keeps user experiences consistent while controlling operational costs. Organizations must weigh the computational demands of vector operations against budget constraints and performance expectations.

Security and Compliance Considerations

Integration strategies must also address security and compliance requirements through comprehensive access control, encryption, and audit capabilities specifically designed for vector data. Organizations need frameworks that maintain data lineage through embedding transformations, implement appropriate privacy protection for sensitive information encoded in vectors, and provide comprehensive monitoring that supports both operational optimization and regulatory-compliance reporting.

What Are the Essential Pinecone Features You Can Leverage Through Airbyte?

Integrating Pinecone DB with Airbyte unlocks a comprehensive suite of advanced vector-database capabilities that streamline data transformation, automate synchronization across multiple sources, and dramatically increase operational efficiency for AI-powered applications. This integration enables organizations to build sophisticated data pipelines that handle the complexity of vector-database management while maintaining the flexibility and control required for enterprise deployments.

How Do Namespaces Enhance Data Organization and Query Performance?

Namespaces provide sophisticated data-partitioning capabilities that enable faster query performance and robust multi-tenancy support within Pinecone DB indexes. These logical partitions create isolated data segments that significantly improve query efficiency by reducing the search space while maintaining security boundaries between different data sources or organizational units.

Airbyte's integration with Pinecone offers three comprehensive namespace-mapping strategies that ensure optimal data organization during large-scale synchronization operations. Destination Default automatically assigns records to pre-configured namespaces based on data-source characteristics. Custom Namespace Mapping offers granular control over data placement, enabling sophisticated naming conventions. Source Namespace Mapping preserves the organizational structure from source systems.

Advanced namespace implementations leverage metadata filtering to create dynamic partitioning strategies that automatically route data based on content characteristics, creation dates, or security classifications. This dynamic approach ensures optimal query performance while supporting complex access-control requirements.
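The effect of namespaces on search space and isolation can be seen in a toy in-memory index. This is a conceptual sketch only: `ToyIndex` is a hypothetical class written for this example and does not reflect how Pinecone stores or searches data internally.

```python
import math
from collections import defaultdict


def cosine(a: list, b: list) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyIndex:
    """Hypothetical in-memory index that partitions records by namespace."""

    def __init__(self) -> None:
        self._namespaces = defaultdict(dict)

    def upsert(self, namespace: str, record_id: str, vector: list) -> None:
        self._namespaces[namespace][record_id] = vector

    def query(self, namespace: str, vector: list, top_k: int = 3) -> list:
        # Only records in the requested namespace are scored, so the
        # search space shrinks and other tenants' data is never touched.
        candidates = self._namespaces.get(namespace, {})
        scored = [(rid, cosine(vector, v)) for rid, v in candidates.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]
```

A query against one tenant's namespace never returns another tenant's records, even when their vectors are identical.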

What Makes Data Ingestion Efficient and Scalable in Pinecone?

Pinecone DB supports sophisticated data-ingestion strategies that accommodate different deployment architectures and performance requirements through optimized bulk-import capabilities and asynchronous processing mechanisms. Serverless indexes leverage bulk imports via Parquet files and async operations for efficient processing of large datasets.

Pod-based indexes utilize optimized batch upserts that can handle up to 1,000 records per batch with minimal latency impact. This flexibility ensures organizations can choose the ingestion strategy that best matches their performance requirements and infrastructure constraints.

Airbyte's Pinecone connector simplifies both ingestion methods by providing automated configuration management that requires only essential parameters like API keys and batch-size specifications. The platform handles complex orchestration, automatically optimizing batch sizes based on data characteristics and infrastructure capabilities while providing comprehensive monitoring and error handling.
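Batching itself is straightforward to sketch. The helper below splits any stream of records into lists no larger than the 1,000-record figure quoted above. The function and constant names are assumptions for illustration and are not part of the Airbyte connector.

```python
from itertools import islice
from typing import Iterable, Iterator

MAX_BATCH = 1000  # per-batch record limit quoted in the text above


def batched(records: Iterable, batch_size: int = MAX_BATCH) -> Iterator[list]:
    """Yield consecutive lists of at most batch_size records."""
    if not 1 <= batch_size <= MAX_BATCH:
        raise ValueError(f"batch_size must be between 1 and {MAX_BATCH}")
    iterator = iter(records)
    # islice consumes the iterator lazily, so large sources never
    # need to be held in memory all at once.
    while batch := list(islice(iterator, batch_size)):
        yield batch
```

Each yielded batch would then be handed to a single upsert call. A smaller `batch_size` may be needed when individual records carry large metadata payloads.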

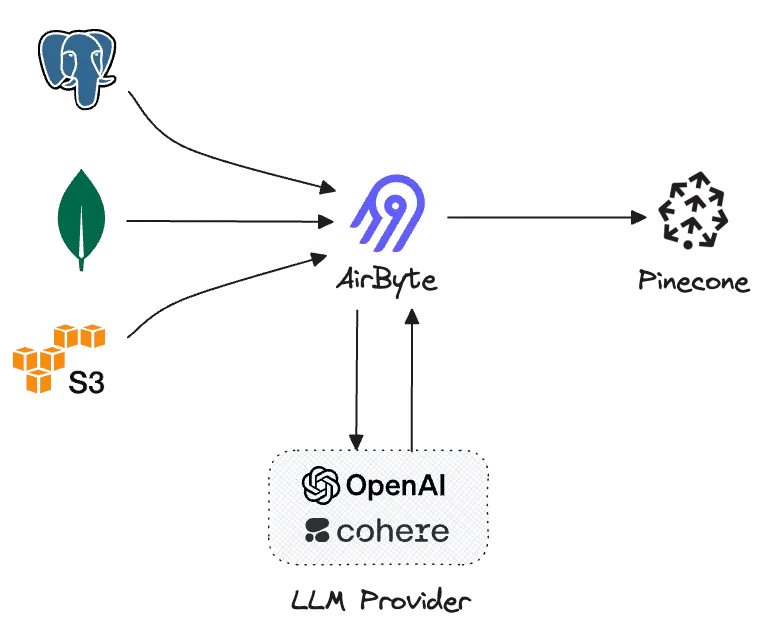

How Does Automated Embedding Generation Transform Data Processing?

Airbyte's integration with Pinecone includes sophisticated RAG-transformation capabilities that automate chunking, indexing, and embedding generation before loading data into vector databases. This automation eliminates the manual effort typically required to prepare data for vector database ingestion.

The platform exposes comprehensive embedding-model support including OpenAI's text-embedding-ada-002 and Cohere's embed-english-light-v2.0, and remains compatible with other major language-model providers through flexible API-integration mechanisms. This flexibility ensures organizations can select the embedding models that best match their accuracy and cost requirements.
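Whichever model is chosen, its output dimension has to match the dimension of the target index. The sketch below shows a pre-flight check for this. The dimensions listed (1536 for text-embedding-ada-002 and 1024 for embed-english-light-v2.0) are the values published by the providers and should be verified against current documentation. The function name is hypothetical.

```python
from typing import Optional

# Output dimensions as published by the model providers (verify before relying on them).
EMBEDDING_DIMENSIONS = {
    "text-embedding-ada-002": 1536,
    "embed-english-light-v2.0": 1024,
}


def dimension_mismatch(model: str, vector: list, index_dimension: int) -> Optional[str]:
    """Return a description of the problem, or None when everything lines up."""
    expected = EMBEDDING_DIMENSIONS.get(model)
    if expected is None:
        return f"unknown model: {model}"
    if expected != index_dimension:
        return f"{model} produces {expected} dimensions but the index expects {index_dimension}"
    if len(vector) != expected:
        return f"vector has {len(vector)} dimensions, expected {expected}"
    return None
```

Running a check like this before a sync surfaces a model and index mismatch early, instead of as a failed write partway through a load.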

What Role Does Reranking Play in Improving Search Accuracy?

Reranking is a two-step retrieval workflow that improves search precision. The first step retrieves a candidate set by vector similarity; the second applies a specialized scoring model that reorders those candidates by relevance to the query.

This approach addresses the limitations of pure vector similarity search by incorporating additional context and relevance signals. Airbyte's incremental-synchronization capabilities keep reranking models operating on fresh, high-quality data, ensuring consistent accuracy improvements over time.
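The two-step flow can be sketched in a few lines. Here the second stage uses a naive keyword-overlap score purely as a stand-in for a real rerank model. `keyword_overlap`, `retrieve_then_rerank`, and the document layout are all assumptions made for this example.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_overlap(query: str, text: str) -> float:
    """Hypothetical stand-in for a rerank model: fraction of query terms found in text."""
    query_terms = set(query.lower().split())
    text_terms = set(text.lower().split())
    return len(query_terms & text_terms) / len(query_terms) if query_terms else 0.0


def retrieve_then_rerank(query_text: str, query_vector: list, docs: list,
                         candidates: int = 3, top_k: int = 2) -> list:
    # Stage 1: take the nearest candidates by vector similarity.
    stage_one = sorted(docs, key=lambda d: cosine(query_vector, d["vector"]),
                       reverse=True)[:candidates]
    # Stage 2: reorder only those candidates with the second scorer.
    stage_two = sorted(stage_one, key=lambda d: keyword_overlap(query_text, d["text"]),
                       reverse=True)
    return [d["id"] for d in stage_two[:top_k]]
```

Because the second scorer only sees the stage-one candidates, the size of the candidate set trades recall against the cost of rescoring.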

How Does Hybrid Search Combine the Best of Multiple Retrieval Methods?

Hybrid search combines sparse keyword-based retrieval with dense semantic embeddings to capture both exact term matching and conceptual similarity. This combination provides more comprehensive search results that address different types of user queries and information needs.

Sparse methods excel at finding exact matches and specific terminology, while dense embeddings capture semantic relationships and contextual meaning. Airbyte facilitates hybrid-search implementations by loading both semi-structured and unstructured data into Pinecone DB while maintaining zero-downtime synchronization across all data sources.
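A common way to blend the two signals is a weighted sum controlled by a single parameter. The sketch below assumes both scores are already normalized to a comparable range, and the `alpha` weighting convention (1.0 for purely dense, 0.0 for purely sparse) is chosen for this example, not taken from Airbyte's connector.

```python
def hybrid_score(dense: float, sparse: float, alpha: float = 0.5) -> float:
    """Convex combination of a dense (semantic) and a sparse (keyword) score."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * dense + (1.0 - alpha) * sparse


def rank_hybrid(results: dict, alpha: float = 0.5) -> list:
    """Order document IDs by blended score.

    results maps each document ID to a (dense_score, sparse_score) pair.
    """
    return sorted(
        results,
        key=lambda doc_id: hybrid_score(results[doc_id][0], results[doc_id][1], alpha),
        reverse=True,
    )
```

With `alpha=1.0` the ranking follows semantic similarity alone, and with `alpha=0.0` it follows keyword matching alone. Values in between let a team tune the balance for its own query mix.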

What Are the Benefits of Automated Batch Processing and Pipeline Orchestration?

Airbyte provides end-to-end pipeline-orchestration capabilities including scheduled source extractions, automated transformations, and optimized Pinecone-loading operations. This comprehensive orchestration eliminates manual intervention while ensuring the reliability and scalability needed for production AI applications.

The platform integrates with orchestration tools such as Apache Airflow, Prefect, and Kubernetes-based workflow systems and offers automated resource management for efficient data processing. This integration ensures that vector database pipelines operate efficiently within existing enterprise data infrastructure while maintaining optimal performance and cost efficiency.

Conclusion

Leveraging Pinecone DB through Airbyte turns vector-data management from a complex technical challenge into a streamlined operational capability for AI applications. Automated ingestion, embedding generation, and pipeline orchestration create a foundation for retrieval-augmented generation systems, semantic-search applications, and AI-powered knowledge-management platforms. With near real-time, incremental synchronization, organizations can manage and scale vector-based operations while upholding the performance, security, and reliability standards required for enterprise AI initiatives. The integration makes vector databases more accessible and manageable for organizations of all sizes.

Frequently Asked Questions

What are the main advantages of using Airbyte with Pinecone DB over other integration approaches?

Airbyte automates embedding generation, sophisticated data preprocessing, and comprehensive monitoring specifically for vector-database applications, reducing implementation complexity and operational overhead compared with generic ETL tools.

How does the integration handle large-scale data volumes and performance optimization?

Intelligent batching, incremental synchronization, and automated resource scaling efficiently process large datasets without impacting query performance. Comprehensive monitoring enables proactive optimization and capacity planning.

What security and compliance features are available for enterprise deployments?

Both platforms provide end-to-end encryption, role-based access controls, and audit logging. Pinecone supports Customer-Managed Encryption Keys and offers enterprise-grade security features, while Airbyte offers self-managed deployment options for complete data sovereignty.

Can the integration support real-time data processing and streaming scenarios?

Yes. Configurable sync frequencies and incremental updates enable near real-time data processing, delivering streaming-like experiences with the reliability required for production AI applications.

How does the platform handle different embedding models and vector dimensions?

Airbyte automatically configures Pinecone indexes to match the dimensional requirements of selected embedding models and allows organizations to switch models easily based on performance, cost, and accuracy considerations.
