Data Quality Monitoring: Key Metrics, Benefits & Techniques


Data quality directly shapes business decisions and, through them, day-to-day operations. Poor-quality data can result in inaccurate insights, incorrect strategies, and considerable financial losses. Effective data-driven decision-making therefore depends on data quality monitoring.

With proper monitoring, you can identify and address issues like duplicate data, missing values, or outdated information, helping ensure your data is accurate, consistent, complete, and reliable.

Let's dive into what data quality monitoring entails, why it's needed, and the metrics worth tracking.

What Is Data Quality Monitoring and Why Does It Matter?

Data quality monitoring is the ongoing assessment of an organization's data quality to confirm it meets required standards and is suitable for its intended use. It involves examining, measuring, and managing data for reliability, accuracy, and consistency. Continuous monitoring catches issues early, before they affect operations or customers, and helps ensure that decisions are based on high-quality data.

Evolution of Modern Data Quality Monitoring

Modern data quality monitoring has evolved beyond traditional batch-based validation to encompass real-time validation systems that can process streaming data with minimal latency while maintaining comprehensive quality assessment. These advanced systems integrate artificial intelligence and machine learning algorithms to establish baseline patterns and automatically detect deviations that may indicate quality issues.

Contemporary monitoring frameworks also incorporate comprehensive data lineage tracking, enabling teams to understand how quality issues propagate through complex data pipelines and impact downstream systems. This holistic approach transforms data quality monitoring from reactive problem-solving to proactive system design that prevents quality issues before they manifest in production environments.

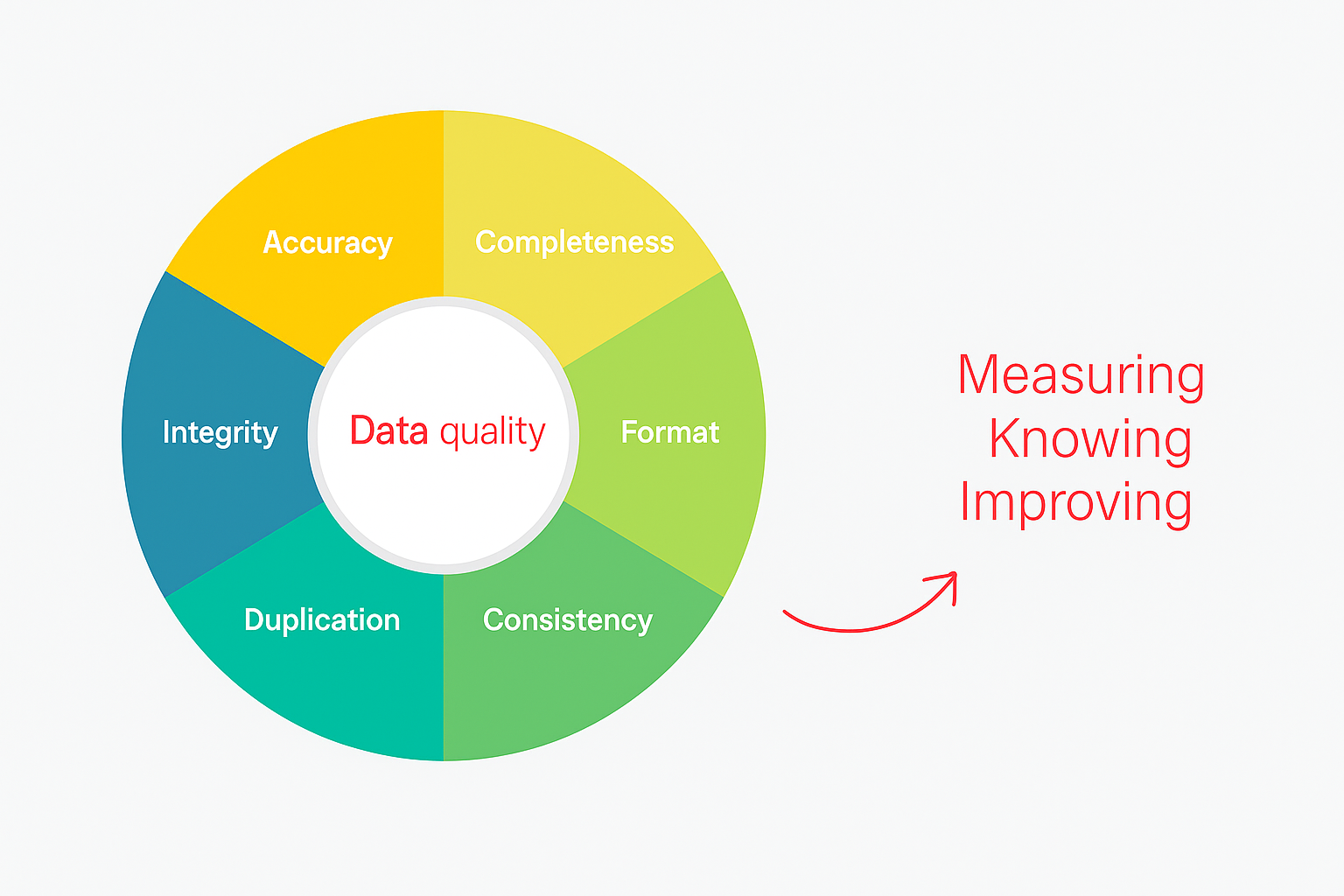

What Are the Key Dimensions of Data Quality?

Understanding the fundamental dimensions of data quality provides the foundation for effective monitoring strategies. These dimensions serve as the building blocks for comprehensive quality assessment frameworks that ensure data meets business requirements and supports reliable decision-making processes.

Core Quality Dimensions

- Accuracy: Represents how closely data values align with real-world values or authoritative sources. This dimension focuses on the correctness and truthfulness of data elements within your systems.

- Completeness: Measures whether all required data elements are present and populated. Missing data can significantly impact analytical outcomes and business processes.

- Consistency: Ensures uniformity of data representation across systems, time periods, and organizational boundaries. Data should maintain the same format, meaning, and structure regardless of where it appears.

- Integrity: Focuses on the structural soundness of data relationships and the preservation of business rules throughout data processing operations. This includes referential integrity and constraint validation.

Advanced Quality Dimensions

- Validity: Confirms that data values conform to predefined formats, standards, and business rules. This dimension ensures data adheres to expected patterns and constraints.

- Timeliness: Ensures data is current and available when needed. Stale data can lead to poor decision-making and operational inefficiencies.

- Uniqueness: Verifies that each real-world entity appears only once within datasets. Duplicate records can skew analysis results and create operational confusion.
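Several of these dimensions can be expressed as simple ratios. The following is a minimal sketch, assuming records arrive as Python dictionaries; the field names, the sample rows, and the deliberately simple email pattern are illustrative assumptions, not part of any specific tool.

```python
import re

# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "not-an-email"},
    {"id": 3, "email": "li@example.com"},
]

# Deliberately simple pattern; real email validation is stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    if not rows:
        return 1.0
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def validity(rows, field, pattern):
    """Share of populated values that match the expected pattern."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.match(v)) / len(values)


def uniqueness(rows, key):
    """Share of rows whose key value is distinct."""
    keys = [r[key] for r in rows]
    return len(set(keys)) / len(keys) if keys else 1.0
```

Tracking each score separately, rather than folding them into one number, keeps it clear which dimension degraded when a score drops.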

Which Data Quality Metrics Should You Monitor?

Beyond the core dimensions, sophisticated quantitative metrics provide deeper insights into data quality patterns and help surface issues before they propagate through business processes.

Fundamental Quality Metrics

- Error Ratio: Measures the percentage of records containing errors relative to the total dataset size. This metric provides a high-level view of overall data health.

- Address Validity Percentage: Tracks the proportion of addresses that conform to postal standards and can be verified against authoritative sources. This metric is particularly important for customer data management.

- Duplicate Record Rate: Identifies the frequency of redundant entries within datasets. High duplication rates often indicate issues in data collection or integration processes.
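As a rough illustration of how two of these metrics might be computed, the sketch below assumes rows are dictionaries and that the caller supplies its own definition of a valid row. The function names and the sample orders are hypothetical.

```python
from collections import Counter


def error_ratio(rows, is_valid):
    """Percentage of rows that fail the supplied validity predicate."""
    if not rows:
        return 0.0
    errors = sum(1 for r in rows if not is_valid(r))
    return 100.0 * errors / len(rows)


def duplicate_record_rate(rows, key_fields):
    """Percentage of rows that repeat the key of another row."""
    if not rows:
        return 0.0
    counts = Counter(tuple(r.get(f) for f in key_fields) for r in rows)
    redundant = sum(c - 1 for c in counts.values())
    return 100.0 * redundant / len(rows)


# Hypothetical sample data.
orders = [
    {"order_id": "A1", "amount": 20.0},
    {"order_id": "A2", "amount": -5.0},  # negative amount counts as an error
    {"order_id": "A1", "amount": 20.0},  # repeats order A1
    {"order_id": "A3", "amount": 12.5},
]

error_pct = error_ratio(orders, lambda r: r["amount"] >= 0)
duplicate_pct = duplicate_record_rate(orders, ["order_id"])
```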

Advanced Performance Metrics

- Data Time-to-Value: Measures the duration between the implementation or adoption of a data solution and the realization of meaningful business value or outcomes, with shorter cycles indicating more efficient processes.

- Data Transformation Error Rate: Tracks failures during data processing and transformation operations. This metric helps identify bottlenecks and quality issues in ETL processes.

- Schema Drift Detection Rate: Monitors the frequency at which data structures change unexpectedly. Uncontrolled schema changes can break downstream processes and applications.
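Schema drift can be detected by comparing the schema a pipeline expects with the one it actually observes. This is a minimal sketch, assuming schemas are represented as dictionaries mapping column names to type names; that representation and the function name are assumptions for illustration.

```python
def detect_schema_drift(expected, observed):
    """Compare two {column: type_name} mappings and report differences."""
    expected_cols, observed_cols = set(expected), set(observed)
    return {
        "added": sorted(observed_cols - expected_cols),
        "removed": sorted(expected_cols - observed_cols),
        "retyped": sorted(
            c for c in expected_cols & observed_cols if expected[c] != observed[c]
        ),
    }


# Hypothetical schemas.
expected_schema = {"id": "int", "email": "str", "created_at": "datetime"}
observed_schema = {"id": "str", "email": "str", "signup_source": "str"}

drift = detect_schema_drift(expected_schema, observed_schema)
```

Counting how many pipeline runs produce a non-empty drift report over a period gives one possible way to track the drift rate.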

Operational Quality Metrics

- Data Lineage Completeness: Assesses how thoroughly you can trace data from source to destination. Complete lineage tracking enables better root cause analysis and impact assessment.

- Quality Rule Coverage: Measures the percentage of data elements subject to active quality validation rules. Higher coverage generally correlates with better overall data quality.

- Dark Data Volume: Quantifies the amount of data that remains unused or inaccessible for analysis. Reducing dark data increases the value derived from data investments.
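Quality Rule Coverage, for example, reduces to a set comparison between the columns that exist and the columns that at least one rule references. The sketch below assumes rules are described as dictionaries with a `columns` list; that structure is a hypothetical convention, not a standard format.

```python
def rule_coverage(columns, rules):
    """Percentage of columns referenced by at least one quality rule."""
    if not columns:
        return 0.0
    covered = {col for rule in rules for col in rule["columns"]}
    return 100.0 * len(covered & set(columns)) / len(columns)


# Hypothetical table columns and rule definitions.
table_columns = ["id", "email", "country", "created_at"]
active_rules = [
    {"name": "id_not_null", "columns": ["id"]},
    {"name": "email_format", "columns": ["email"]},
]

coverage_pct = rule_coverage(table_columns, active_rules)
```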

Why Should You Monitor Data Quality?

Quality degradation can occur at multiple stages of the data lifecycle, making comprehensive monitoring essential for maintaining reliable data-driven operations.

Data Ingestion Challenges

Data ingestion pulls data from varied sources including databases, CRMs, IoT devices, and external APIs into centralized systems. This process introduces numerous opportunities for quality degradation, including duplication, missing or stale records, incorrect formats, and undetected outliers.

Source system inconsistencies create immediate challenges when integrating data from multiple platforms. Different naming conventions, data types, and validation rules across source systems can introduce errors during the consolidation process.

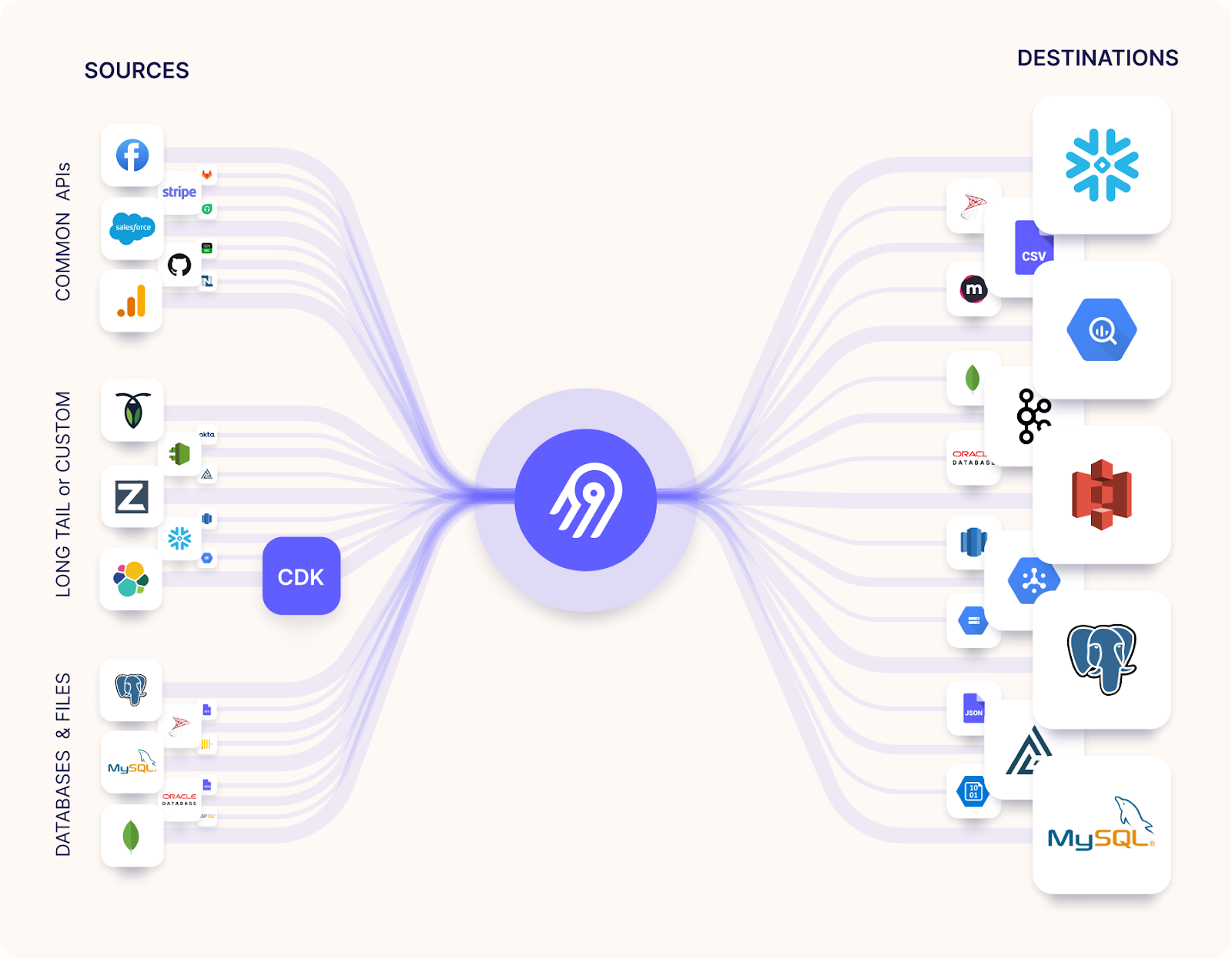

Streamline Your Data Ingestion with Airbyte

Airbyte addresses critical ingestion challenges through its comprehensive data integration platform that combines extensive connectivity with robust quality assurance mechanisms. With more than 600 connectors, Airbyte provides standardized data ingestion that reduces quality issues at the source while maintaining data integrity throughout the pipeline process.

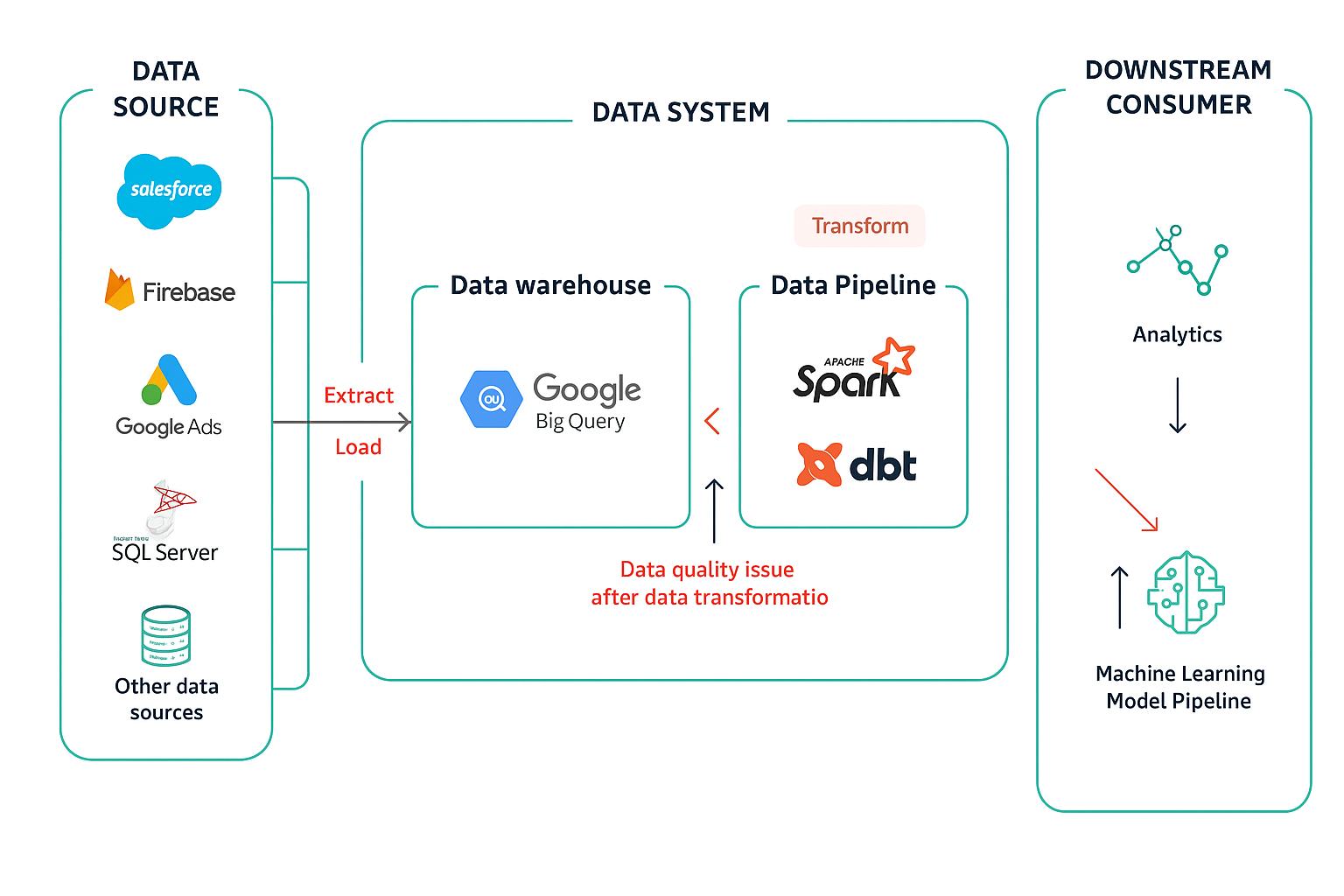

Data Processing and Pipeline Issues

Data processing pipelines represent critical points where quality issues can be introduced through faulty transformation logic, unhandled schema changes, or pipeline configuration errors. Complex transformations can inadvertently corrupt data or introduce subtle errors that only surface during downstream analysis.

Pipeline failures often cascade through interconnected systems, amplifying the impact of initial quality issues. A single failed validation or transformation can affect multiple downstream processes and applications.

Downstream System Impact

Quality issues that escape detection during ingestion and processing often manifest in downstream systems including business intelligence tools, machine learning pipelines, and operational applications. Poor data quality in analytical systems leads to unreliable reports and misguided strategic decisions.

Machine learning models trained on poor-quality data exhibit reduced accuracy and may perpetuate biases present in the training data. Operational applications consuming poor-quality data can malfunction or provide incorrect information to users and customers.

How Do AI and Machine Learning Transform Data Quality Monitoring?

AI and machine learning technologies transform data quality monitoring from reactive, rule-based approaches to proactive, intelligent systems that can learn, adapt, and predict issues before they impact business operations.

Intelligent Anomaly Detection and Pattern Recognition

Machine learning algorithms excel at identifying subtle patterns in data that traditional rule-based systems might miss. These systems establish baseline behaviors for datasets and automatically flag deviations that could indicate quality issues.

Unsupervised learning techniques can detect anomalies without requiring predefined rules or thresholds. This capability is particularly valuable for identifying previously unknown quality issues or detecting gradual degradation trends.
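The baseline-and-deviation idea can be illustrated with a simple statistical stand-in. The sketch below uses a z-score against historical values rather than a trained model, so it only approximates what a production anomaly detector would do; the threshold of 3.0 and the sample row counts are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(history, new_values, threshold=3.0):
    """Flag new values that sit far from the historical baseline.

    `history` needs at least two points so a standard deviation exists.
    """
    baseline_mean = mean(history)
    baseline_std = stdev(history)
    if baseline_std == 0:
        # Flat history: any different value is a deviation.
        return [v for v in new_values if v != baseline_mean]
    return [
        v for v in new_values
        if abs(v - baseline_mean) / baseline_std > threshold
    ]


# Hypothetical daily row counts for one table.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
flagged = find_anomalies(daily_row_counts, [1008, 400, 1002])
```

A fixed z-score works best for roughly stable metrics. Seasonal or trending data usually needs a baseline that accounts for those patterns.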

Automated Rule Generation and Intelligent Validation

AI systems can analyze historical data patterns to automatically generate quality validation rules. This automation reduces the manual effort required to establish comprehensive quality frameworks while ensuring rules remain relevant as data patterns evolve.
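A much simpler, non-AI version of the same idea is to derive rules directly from historical records. This sketch infers a numeric range or an allowed-value set per column and then checks new rows against them. The rule format and function names are hypothetical, and real systems would add tolerance so that legitimate new values are not rejected outright.

```python
def infer_rules(rows):
    """Derive a range rule for numeric columns and a value set for the rest."""
    rules = {}
    columns = sorted({c for r in rows for c in r})
    for col in columns:
        values = [r[col] for r in rows if r.get(col) is not None]
        if not values:
            continue
        numeric = all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
        )
        if numeric:
            rules[col] = {"type": "range", "min": min(values), "max": max(values)}
        else:
            rules[col] = {"type": "allowed_values", "values": sorted(set(values))}
    return rules


def violates(rules, row):
    """Return the columns in `row` that break an inferred rule."""
    problems = []
    for col, rule in rules.items():
        value = row.get(col)
        if value is None:
            continue  # null handling is left to a separate completeness check
        if rule["type"] == "range":
            in_range = (
                isinstance(value, (int, float))
                and rule["min"] <= value <= rule["max"]
            )
            if not in_range:
                problems.append(col)
        elif value not in rule["values"]:
            problems.append(col)
    return problems


# Hypothetical historical records.
history = [
    {"age": 31, "plan": "free"},
    {"age": 45, "plan": "pro"},
    {"age": 27, "plan": "free"},
]
inferred = infer_rules(history)
```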

Natural language processing capabilities enable these systems to understand business context and generate more sophisticated validation logic that considers semantic meaning rather than just syntactic correctness.

Predictive Quality Management and Root Cause Analysis

Advanced analytics can predict when quality issues are likely to occur based on historical patterns and current system conditions. This predictive capability enables proactive intervention before quality degradation affects business operations.

Machine learning models can also accelerate root cause analysis by automatically correlating quality issues with potential contributing factors across complex data ecosystems. This capability significantly reduces the time required to identify and resolve quality problems.

Machine Learning Model Integration and Feedback Loops

Modern data quality monitoring systems integrate directly with machine learning pipelines to provide continuous feedback on data quality trends. This integration enables automated model retraining when quality metrics breach defined thresholds or data drift is detected.

Feedback loops between quality monitoring systems and data processing pipelines enable automatic adjustments to processing logic based on observed quality patterns. This self-improving capability reduces manual intervention requirements while maintaining high quality standards.

What Is Data Observability and How Does It Enhance Quality Monitoring?

Data observability provides end-to-end visibility into data health, lineage, and operational characteristics across entire data ecosystems. This comprehensive approach extends beyond traditional quality monitoring to include operational metrics and system performance indicators.

The Five Pillars of Data Observability

- Freshness Monitoring: Tracks how recently data was updated or created. This pillar ensures data remains current and relevant for business decision-making processes.

- Quality Assessment: Encompasses traditional data quality dimensions including accuracy, completeness, and consistency. This pillar provides comprehensive quality metrics across all data assets.

- Volume Monitoring: Tracks data quantity changes over time. Unexpected volume changes often indicate upstream issues or processing problems that require investigation.

- Schema Monitoring: Detects structural changes in data formats, tables, or APIs. Schema evolution can break downstream processes if not properly managed and communicated.

- Lineage Tracking: Maps data flow through systems from source to destination. Complete lineage visibility enables impact analysis and facilitates troubleshooting when issues occur.
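Two of these pillars, freshness and volume, lend themselves to very small checks. The sketch below assumes the caller already knows a table's last update time and row counts. The six-hour limit and the sample values are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated, now, max_age):
    """True when the data was updated within the allowed age."""
    return now - last_updated <= max_age


def volume_change_pct(previous_count, current_count):
    """Percentage change in row count, or None when there is no baseline."""
    if previous_count == 0:
        return None
    return 100.0 * (current_count - previous_count) / previous_count


# Hypothetical observations for one table.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)

fresh = is_fresh(last_load, now, max_age=timedelta(hours=6))
change = volume_change_pct(previous_count=1000, current_count=700)
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check deterministic and easy to test.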

Proactive Issue Detection and Prevention

Data observability platforms combine multiple monitoring signals to provide early warning systems that detect issues before they impact business operations. These systems correlate quality metrics with operational indicators to identify root causes more effectively.

Automated alerting capabilities ensure relevant stakeholders receive timely notifications when quality thresholds are breached or unusual patterns are detected. This proactive approach minimizes the business impact of data quality issues.
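Threshold-based alerting can be sketched as a comparison between current metric values and configured limits. The metric names and limits below are hypothetical, and the sketch assumes every metric is "lower is better"; a real system would also route each alert to the right stakeholder.

```python
def evaluate_alerts(metrics, thresholds):
    """Return one message per breached or missing metric."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            # A metric that stops reporting is itself worth flagging.
            alerts.append(f"{name}: metric missing")
        elif value > limit:
            alerts.append(f"{name}: {value} exceeds limit {limit}")
    return alerts


# Hypothetical current metrics and limits.
current_metrics = {"null_rate_pct": 7.5, "duplicate_rate_pct": 0.4}
limits = {"null_rate_pct": 5.0, "duplicate_rate_pct": 1.0, "freshness_lag_hours": 6}

alerts = evaluate_alerts(current_metrics, limits)
```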

Integration with Modern Data Architectures

Modern observability platforms integrate with cloud-native data architectures, providing monitoring capabilities across data lakes, warehouses, and streaming platforms. This broad coverage reduces the likelihood that data assets remain invisible to quality monitoring systems, although complete visibility is not guaranteed.

API-first architectures enable observability platforms to integrate with existing data tools and workflows. This integration approach ensures monitoring capabilities enhance rather than disrupt existing operational processes.

Comprehensive Monitoring and Analytics Capabilities

Advanced observability platforms provide sophisticated analytics capabilities that help identify trends, patterns, and relationships within quality metrics. These insights enable data teams to optimize quality management strategies over time.

Customizable dashboards and reporting capabilities ensure different stakeholders receive relevant quality information tailored to their specific needs and responsibilities. This targeted approach improves engagement with quality management initiatives across organizations.

What Are the Most Effective Data Quality Monitoring Techniques?

Traditional Validation and Assessment Techniques

Data Auditing involves systematic examination of data assets to assess quality levels and identify improvement opportunities. Regular audits provide baseline measurements and track quality trends over time.

Data Profiling analyzes dataset characteristics, including distributions, patterns, and relationships. Profiling results inform quality rule development and help establish realistic quality expectations.

Data Cleaning encompasses techniques for correcting identified quality issues. Effective cleaning processes balance automation with human oversight to ensure corrections improve rather than compromise data integrity.
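Profiling in particular is easy to illustrate. The sketch below summarizes a single column using only the standard library. The statistics chosen and the sample values are assumptions, and dedicated profiling tools report far more.

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: size, nulls, distinct values, most common value."""
    present = [v for v in values if v is not None]
    counts = Counter(present)
    return {
        "count": len(values),
        "null_count": len(values) - len(present),
        "distinct_count": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


# Hypothetical column of country codes.
country_profile = profile_column(["US", "DE", None, "US", "FR"])
```

A profile like this can feed rule development directly. For instance, a column with a small distinct count is a candidate for an allowed-values rule.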

Advanced Automated Monitoring Approaches

Real-Time Data Monitoring provides continuous quality assessment as data flows through systems. This approach enables immediate detection and correction of quality issues before they propagate to downstream systems.

Automated Data Quality Rule Enforcement applies predefined quality standards automatically during data processing. This technique ensures consistent quality application across all data processing operations.
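One common way to enforce rules during processing is to split each batch into accepted rows and quarantined rows. The following is a minimal sketch of that pattern. The rule names, predicates, and the quarantine structure are assumptions for illustration.

```python
def enforce_rules(rows, rules):
    """Split rows into those passing every rule and those failing any."""
    accepted, quarantined = [], []
    for row in rows:
        failed = [name for name, check in rules.items() if not check(row)]
        if failed:
            # Keep the row with its failure reasons so it can be reviewed.
            quarantined.append({"row": row, "failed_rules": failed})
        else:
            accepted.append(row)
    return accepted, quarantined


# Hypothetical rules expressed as predicates over a row.
rules = {
    "id_present": lambda r: r.get("id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}

batch = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 3, "amount": -2.0},
]
accepted, quarantined = enforce_rules(batch, rules)
```

Quarantining rather than dropping failed rows preserves the evidence needed for root cause analysis.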

Performance and Scalability Testing

Load testing ensures quality monitoring systems maintain performance under peak data volumes. Regular performance testing identifies capacity constraints before they impact monitoring effectiveness.

Scalability assessment evaluates how monitoring systems adapt to growing data volumes and increasing complexity. This evaluation ensures monitoring capabilities remain effective as data ecosystems evolve.

Comprehensive Metrics and Trend Analysis

Long-term trend analysis identifies gradual quality degradation that might not trigger immediate alerts. This analysis enables proactive quality management and helps prioritize improvement initiatives.
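Gradual degradation of this kind can be surfaced by fitting a straight line to a metric's history and looking at its slope. The sketch below uses an ordinary least-squares slope over equally spaced observations; the daily completeness values are hypothetical.

```python
def trend_slope(values):
    """Least-squares slope of values observed at equally spaced intervals."""
    n = len(values)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


# Hypothetical daily completeness percentages: each day looks acceptable,
# but the series loses about 0.2 points per day.
daily_completeness = [99.0, 98.8, 98.6, 98.4, 98.2]
slope = trend_slope(daily_completeness)
```

A persistently negative slope can justify investigation even when no single day crosses an alert threshold.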

Comparative analysis across different data sources, time periods, or business units provides insights into quality patterns and helps identify best practices that can be applied more broadly.

How Do Privacy and Compliance Requirements Shape Data Quality Monitoring?

Modern compliance requirements demand sophisticated approaches that balance comprehensive quality monitoring with data protection obligations.

Privacy-First Quality Monitoring Frameworks

Data minimization principles require monitoring systems to assess quality using the minimum data necessary to achieve monitoring objectives. This approach reduces privacy risks while maintaining monitoring effectiveness.

Pseudonymization and anonymization techniques enable quality assessment without exposing sensitive personal information. These privacy-preserving approaches ensure compliance with regulations while supporting comprehensive monitoring.
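As one illustration of pseudonymized quality checks, the sketch below replaces email addresses with keyed hashes (HMAC-SHA256) and then looks for duplicates among the tokens, so the check never handles raw addresses after tokenization. The key shown is a placeholder and the normalization step is an assumption. Note that keyed hashing is pseudonymization, not anonymization, because anyone holding the key can recompute tokens.

```python
import hashlib
import hmac
from collections import Counter

# Placeholder only; in practice the key would come from a secret store.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value, secret_key=SECRET_KEY):
    """Return a stable keyed hash of a normalized value."""
    normalized = value.strip().lower()
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def duplicate_tokens(values):
    """Map each token that appears more than once to its count."""
    counts = Counter(pseudonymize(v) for v in values)
    return {token: n for token, n in counts.items() if n > 1}


# Hypothetical input; the first two differ only in case and whitespace.
emails = ["ana@example.com", "Ana@Example.com ", "li@example.com"]
duplicates = duplicate_tokens(emails)
```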

Regulatory Compliance Integration

GDPR requires organizations to keep personal data accurate and to honor data subject rights such as rectification. Quality monitoring systems must support these requirements through comprehensive audit trails and correction mechanisms.

Industry-specific regulations impose additional quality requirements that monitoring systems must address. Healthcare, financial services, and other regulated industries require specialized monitoring approaches that address sector-specific compliance obligations.

Data Governance and Accountability Frameworks

Data stewardship programs establish clear accountability for data quality across organizations. Monitoring systems support these programs by providing visibility into quality metrics and enabling performance tracking for data stewards.

Policy enforcement mechanisms ensure quality standards align with organizational governance requirements. Automated policy enforcement reduces the risk of compliance violations while supporting consistent quality management.

Audit Trail and Transparency Requirements

Comprehensive logging ensures all quality monitoring activities are documented for audit purposes. These audit trails support compliance demonstrations and enable forensic analysis when quality issues occur.
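An audit trail can be as simple as one structured record per check run. The sketch below builds JSON lines and appends them to an in-memory list that stands in for an append-only file or table. The field names and the sample check are assumptions, not a prescribed schema.

```python
import json
from datetime import datetime, timezone


def audit_entry(check_name, dataset, passed, details=None, now=None):
    """Build one JSON line describing a single quality check run."""
    timestamp = now if now is not None else datetime.now(timezone.utc)
    return json.dumps(
        {
            "timestamp": timestamp.isoformat(),
            "dataset": dataset,
            "check": check_name,
            "passed": passed,
            "details": details or {},
        },
        sort_keys=True,
    )


audit_log = []  # stand-in for an append-only file or table
audit_log.append(
    audit_entry(
        "email_not_null",
        "customers",
        passed=False,
        details={"null_rows": 12},
        now=datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc),
    )
)
```

Recording passing checks as well as failures makes it possible to show later that a check actually ran.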

Transparency reporting provides stakeholders with visibility into quality metrics and improvement initiatives. Regular reporting demonstrates organizational commitment to data quality and supports accountability frameworks.

Conclusion

Data quality monitoring has evolved from simple validation into a sophisticated discipline that combines AI capabilities, observability frameworks, and privacy-preserving approaches. Modern organizations need monitoring strategies that address complex data environments while meeting regulatory requirements.

The future of data quality monitoring lies in intelligent systems that learn and adapt while providing necessary transparency and control. Investing in these capabilities is a strategic choice that enables organizations to maximize value from data assets while maintaining trust in an increasingly data-driven world.

Frequently Asked Questions

What is the difference between data quality monitoring and data validation?

Data quality monitoring is an ongoing, comprehensive process that continuously assesses multiple dimensions of data health across entire systems. Data validation typically focuses on specific rules or checks applied at particular points in data processing. While validation is a component of monitoring, comprehensive monitoring encompasses broader observability, trend analysis, and proactive issue detection across the complete data lifecycle.

How often should data quality monitoring be performed?

The frequency depends on your data usage patterns and business requirements. Critical operational data should be monitored in real-time or near real-time, while analytical datasets might be assessed daily or weekly. High-velocity data streams require continuous monitoring, whereas static reference data might only need periodic assessment. The key is aligning monitoring frequency with business impact and data change rates.

What are the most common causes of data quality issues?

Common causes include system integration problems during data ingestion, transformation errors in ETL processes, source system changes that aren't communicated downstream, manual data entry mistakes, and inadequate validation rules. Schema changes, network issues, and poorly designed data pipelines also contribute significantly to quality problems. Understanding these patterns helps prioritize monitoring efforts and prevention strategies.

How do you measure the ROI of data quality monitoring investments?

ROI measurement focuses on prevented costs from avoided errors, reduced manual correction efforts, improved decision-making outcomes, and decreased system downtime. Quantifiable benefits include reduced customer service issues, prevented compliance violations, improved operational efficiency, and faster time-to-insight for business decisions. Track metrics like error detection rates, resolution times, and business impact of prevented issues.

Can data quality monitoring work with legacy systems?

Yes, though implementation approaches vary based on system capabilities. Legacy systems may require external monitoring tools that connect through available interfaces like database connections, file exports, or API endpoints. Modern monitoring platforms often include connectors for common legacy systems. The key is establishing monitoring touchpoints that provide visibility into data quality without disrupting existing operations.
