Data Preprocessing: What it is, Steps, & Methods Involved

When flawed data silently corrupts your AI models and strategic decisions, you're not just fighting inaccuracies—you're battling organizational distrust. With many organizations admitting they don't fully trust their data for decision-making and data quality remaining the primary challenge for many enterprises, the consequences extend far beyond technical inconvenience. Poor preprocessing doesn't just delay projects—it undermines the foundation of data-driven innovation that modern businesses depend on for competitive advantage.

This guide walks through the complete process of data preprocessing, covering both fundamental techniques and cutting-edge methodologies that address today's complex data challenges. From handling traditional quality issues to implementing advanced frameworks for real-time adaptation and governance, you'll discover how to transform raw, messy data into reliable assets that power accurate analytics and robust machine-learning models.

What Is Data Preprocessing?

Data preprocessing represents the foundational phase of any data analysis or machine-learning pipeline, where raw data undergoes systematic transformation to become suitable for modeling and analysis. This critical process involves cleaning, structuring and optimizing data to ensure downstream algorithms can extract meaningful patterns and generate reliable insights.

Modern data preprocessing extends beyond simple cleaning operations to encompass sophisticated techniques like synthetic-data generation, automated feature engineering and real-time quality monitoring. These advanced approaches address contemporary challenges such as privacy compliance, scalability demands and the need for continuous model adaptation in dynamic environments.

The preprocessing phase directly impacts model performance, with studies showing that well-preprocessed data can improve machine-learning accuracy, sometimes substantially, compared to models trained on raw data. However, the degree of improvement varies by context and dataset. This makes preprocessing not just a preparatory step, but a strategic component that determines the success of entire data-science initiatives.

Why Is Data Preprocessing Critical for Modern Analytics?

The importance of data preprocessing has intensified with the rise of automated decision-making and AI-augmented business processes. Organizations now face unprecedented data complexity, with information flowing from diverse sources—including IoT sensors, social-media platforms, cloud applications and legacy systems—each with distinct formatting standards and quality characteristics.

Poor data quality creates cascading effects throughout analytical workflows. When preprocessing steps are inadequate, models develop biases toward over-represented data segments, miss critical patterns in under-sampled populations and fail to generalize to real-world scenarios. This is particularly problematic in regulated industries such as healthcare and finance, where biased models can lead to discriminatory outcomes and compliance violations.

Key benefits of comprehensive data preprocessing include:

- Noise Reduction – eliminates errors and inconsistencies in datasets, reducing noise that interferes with pattern recognition and helping algorithms identify genuine relationships more accurately

- Enhanced Model Compatibility – transforms heterogeneous data types and formats into standardized representations that machine-learning algorithms can process effectively

- Improved Computational Efficiency – optimizes data structures and reduces dimensionality to accelerate training times while maintaining model performance

- Regulatory Compliance – implements privacy-preserving transformations and audit trails that satisfy data-governance requirements across jurisdictions

Advanced preprocessing also enables organizations to leverage synthetic-data generation for augmenting limited datasets, implement automated quality checks that prevent corrupted data from reaching production models and establish lineage tracking that supports reproducibility and debugging throughout the ML lifecycle.

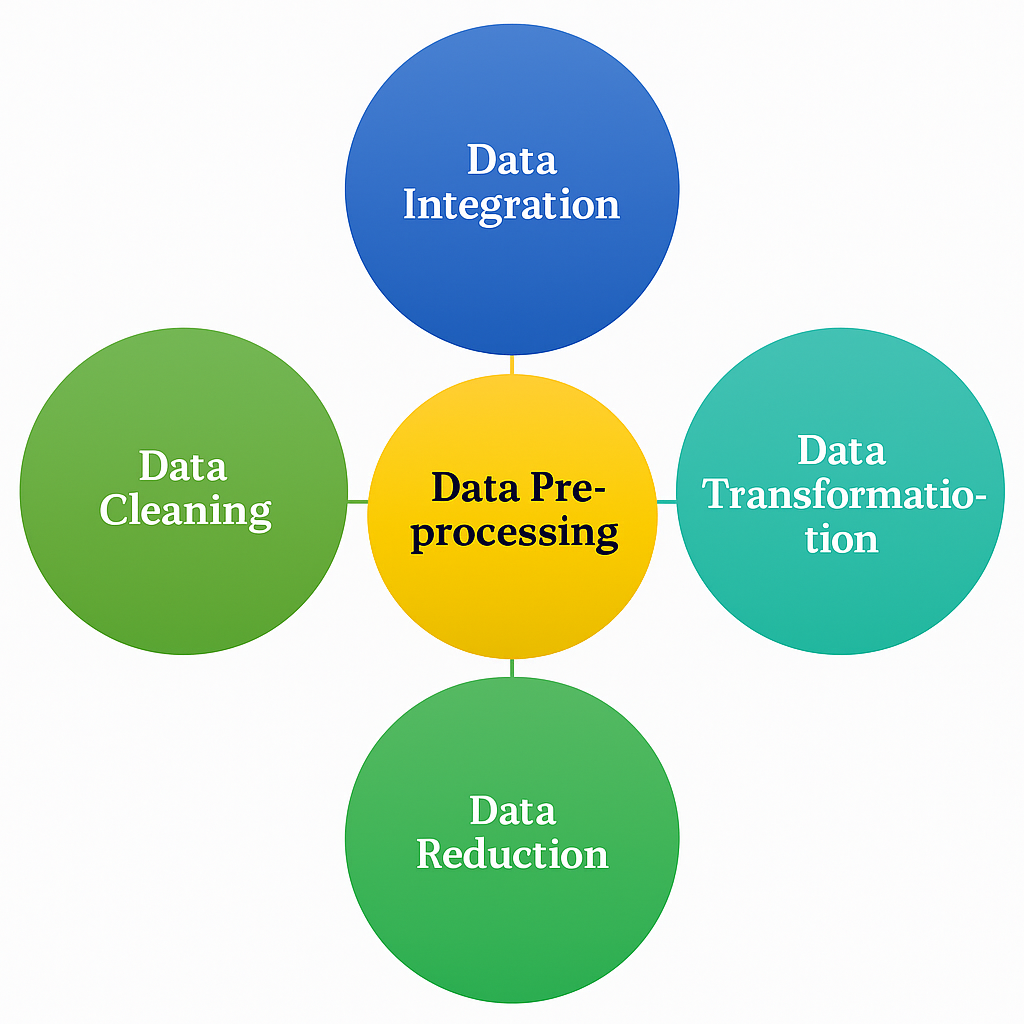

What Are the Essential Steps in Data Preprocessing?

Modern data preprocessing encompasses both traditional foundational steps and advanced techniques that leverage automation and machine-learning optimization. These methods collectively address data quality, integration complexity and scalability requirements in contemporary analytical environments.

1. Data Integration

Data integration has evolved from simple concatenation to sophisticated fusion techniques that preserve semantic relationships across heterogeneous sources. Contemporary integration approaches must handle multi-modal data types, resolve schema conflicts and maintain data lineage throughout the combination process.

Modern integration workflows typically involve cloud-native platforms that support real-time streaming alongside batch processing, enabling organizations to unify data from operational systems, external APIs and historical archives. This hybrid approach ensures comprehensive coverage while minimizing latency for time-sensitive analytics.

- Record Linkage – employs advanced matching algorithms that combine string similarity, probabilistic modeling and machine-learning classifiers to identify records referring to the same entity across datasets with high accuracy

- Data Fusion – integrates information from multiple sources using conflict-resolution strategies, quality-based weighting and semantic reconciliation to create comprehensive, authoritative datasets

- Schema Harmonization – automatically aligns disparate data structures through semantic mapping and transformation rules that preserve meaning while enabling unified processing

See also : What is Data Matching?

2. Data Transformation

Data transformation has evolved to incorporate intelligent automation and context-aware processing that adapts to dataset characteristics and downstream model requirements. Modern transformation workflows leverage machine learning to optimize preprocessing decisions and ensure transformations enhance rather than degrade predictive signal.

Contemporary cleaning approaches utilize deep-learning models for sophisticated imputation, ensemble methods for outlier detection and automated quality assessment that continuously monitors transformation effectiveness. These techniques significantly outperform traditional rule-based methods, particularly for complex datasets with subtle quality issues.

Advanced cleaning encompasses several key areas:

- Intelligent Outlier Detection – combines statistical approaches such as isolation forests with domain-specific rules and machine-learning anomaly detectors to distinguish genuine outliers from data-entry errors

- Context-Aware Missing-Value Imputation – employs transformer-based models and multivariate techniques like MICE that leverage feature relationships rather than simple statistical substitution

- Automated Duplicate Removal – uses fuzzy-matching algorithms and record clustering to identify near-duplicates that simple hash-based approaches miss

Modern transformation techniques extend these foundations:

- Adaptive Feature Scaling – applies different normalization strategies to feature subsets based on their distribution characteristics, preserving important signal while ensuring algorithm compatibility

- Semantic Categorical Encoding – leverages target relationships and hierarchical structures in categorical variables to create encodings that capture meaningful patterns rather than arbitrary assignments

- Distribution-Aware Discretization – automatically determines optimal binning strategies for continuous variables using entropy minimization and information-gain measures

Further reading : Data Transformation Tools

3. Data Reduction

Data reduction strategies now incorporate sophisticated machine-learning techniques that preserve predictive information while dramatically reducing computational requirements. Modern reduction approaches optimize for both storage efficiency and model performance, ensuring that dimension reduction enhances rather than degrades analytical outcomes.

Contemporary reduction methods integrate automated feature engineering with traditional dimensionality reduction, creating hybrid approaches that generate new predictive features while eliminating redundant information. This dual strategy maximizes information density in the final dataset.

- Intelligent Dimensionality Reduction – combines Principal Component Analysis with autoencoder neural networks and manifold-learning techniques to capture non-linear relationships while reducing feature space

- Automated Feature Selection – employs ensemble methods, recursive feature elimination and mutual-information measures to identify optimal feature subsets based on predictive contribution rather than simple correlation measures

- Adaptive Sampling – uses stratified and importance-based sampling strategies that preserve dataset characteristics while reducing size—particularly valuable for large-scale datasets where full processing is computationally prohibitive

Advanced reduction frameworks now support iterative optimization where feature selection and model training occur jointly, ensuring that reduction decisions enhance final model performance rather than simply minimizing dataset size.

How Does Data Provenance and Lineage Tracking Enhance Preprocessing Quality?

Data provenance and lineage tracking have become essential components of modern preprocessing workflows, providing transparency and accountability that regulatory frameworks increasingly demand. These capabilities document the complete transformation history of datasets, enabling organizations to verify data authenticity, debug processing errors and maintain compliance with governance requirements.

Provenance systems capture metadata about data origins, transformation steps and quality checkpoints throughout the preprocessing pipeline. This documentation proves invaluable when models exhibit unexpected behavior or when auditors require evidence of data-handling procedures. Advanced provenance frameworks automatically generate audit trails without requiring manual documentation, reducing compliance overhead while improving process reliability.

Modern lineage tracking extends beyond simple workflow documentation to include impact-analysis capabilities. When source data changes or preprocessing logic requires updates, lineage systems can automatically identify all downstream artifacts that require regeneration. This dependency mapping prevents inconsistencies and ensures that model-training datasets remain synchronized with their source systems.

Implementation approaches leverage graph databases and metadata-management platforms that capture relationships between datasets, transformation steps and output artifacts. These systems support both automated metadata extraction from processing frameworks and manual annotation for business context that automated systems cannot capture.

Key benefits include rapid root-cause analysis when data-quality issues emerge, automated compliance reporting that reduces regulatory burden and reproducibility guarantees that enable reliable model deployment across environments. Organizations implementing comprehensive lineage tracking report significantly faster debugging times and markedly improved stakeholder confidence in analytical outputs.

What Role Does Drift Monitoring Play in Real-Time Data Preprocessing?

Data-drift monitoring has emerged as a critical capability for maintaining preprocessing effectiveness in production environments where data characteristics continuously evolve. Unlike traditional batch-processing scenarios, real-time preprocessing must adapt to shifting distributions, emerging patterns and changing business contexts without human intervention.

Drift-detection systems continuously compare incoming data streams against baseline distributions established during initial preprocessing design. When statistical measures such as Kolmogorov-Smirnov tests or Jensen-Shannon divergence indicate significant distribution shifts, automated systems can trigger preprocessing-parameter updates, model retraining or quality-alert workflows to maintain system effectiveness.

Advanced monitoring frameworks distinguish between different types of drift to enable appropriate responses. Covariate drift affects feature distributions and may require scaling-parameter adjustments, while concept drift changes the relationship between features and target variables, potentially necessitating complete preprocessing-pipeline revision. Label drift indicates changes in target-variable distributions that may require sampling-strategy modifications.

Real-time adaptation capabilities enable preprocessing systems to adjust parameters dynamically based on observed drift patterns. For example, normalization parameters can be incrementally updated using streaming statistics, while imputation strategies can be modified when missing-value patterns shift. These adaptive approaches maintain preprocessing effectiveness without requiring complete pipeline regeneration.

Implementation typically involves deploying lightweight monitoring agents alongside production preprocessing systems, with configurable alerting thresholds that balance sensitivity against false-alarm rates. Cloud-native platforms now provide managed drift-detection services that integrate seamlessly with existing preprocessing workflows, reducing operational complexity while ensuring comprehensive coverage.

Organizations using automated drift monitoring report faster response times to data-quality degradation and significantly improved model reliability in dynamic business environments. The proactive detection and adaptation capabilities prevent performance degradation before it impacts business outcomes, transforming drift from a reactive problem into a managed operational consideration.

Conclusion

Data preprocessing serves as the foundation for reliable analytics and robust machine-learning systems, directly determining the quality and trustworthiness of downstream insights. By implementing comprehensive preprocessing workflows that combine traditional techniques with modern approaches—such as automated quality monitoring, synthetic-data generation and real-time adaptation capabilities—organizations can transform raw data into strategic assets that drive competitive advantage.

The evolution from basic cleaning operations to intelligent, automated preprocessing systems reflects the increasing complexity of modern data environments and the growing importance of data-driven decision-making. As organizations continue to expand their analytical capabilities and face mounting regulatory requirements, investing in sophisticated preprocessing infrastructure becomes essential for maintaining both operational efficiency and compliance standards.

Success in contemporary data preprocessing requires embracing both foundational principles and emerging technologies, ensuring that your data-transformation workflows can adapt to evolving business needs while maintaining the reliability and transparency that stakeholders demand.

Frequently Asked Questions

What are the most common data preprocessing challenges?

The most common data preprocessing challenges include handling missing values, detecting and correcting outliers, managing inconsistent data formats across multiple sources, dealing with duplicate records, and ensuring data quality at scale. Modern organizations also face challenges with real-time preprocessing requirements, maintaining data lineage, and implementing privacy-preserving transformations to meet regulatory compliance standards.

How long does data preprocessing typically take?

Data preprocessing typically consumes 60-80% of the total time spent on data science projects. The duration varies significantly based on data complexity, source diversity, and quality requirements. Simple datasets with minimal quality issues may require only hours or days of preprocessing, while complex enterprise datasets with multiple sources, quality issues, and strict governance requirements can take weeks or months to properly preprocess.

What tools are best for data preprocessing?

The best data preprocessing tools depend on your specific requirements and technical environment. Popular options include Python libraries like pandas, scikit-learn, and PyAirbyte for programmatic preprocessing, cloud-native platforms like Airbyte for automated data integration and transformation, and enterprise solutions that provide visual interfaces alongside advanced automation capabilities. Modern organizations often use hybrid approaches that combine multiple tools to address different preprocessing requirements.

How do you know if your data preprocessing is effective?

Effective data preprocessing can be measured through several key indicators: improved model performance metrics, reduced training time, decreased data quality issues in downstream processes, and enhanced reproducibility across different environments. Implementing automated quality monitoring, drift detection, and comprehensive testing frameworks helps validate preprocessing effectiveness and ensures consistent results over time.

What's the difference between data cleaning and data preprocessing?

Data cleaning focuses specifically on identifying and correcting errors, inconsistencies, and quality issues in datasets, such as removing duplicates, fixing formatting errors, and handling missing values. Data preprocessing is a broader concept that encompasses data cleaning along with transformation, integration, reduction, and optimization activities that prepare data for specific analytical or machine-learning purposes.

Should data preprocessing be automated?

Data preprocessing should incorporate automation where possible to improve consistency, reduce manual effort, and enable scalable operations. However, complete automation isn't always appropriate—domain expertise and human judgment remain essential for handling complex data quality issues, validating transformation results, and ensuring business context is preserved. The optimal approach combines automated processing for routine operations with human oversight for complex decisions and quality validation.

How does cloud-based preprocessing compare to on-premises solutions?

Cloud-based preprocessing offers advantages in scalability, automatic updates, and reduced infrastructure management overhead, while on-premises solutions provide greater control over data sovereignty and security. Modern hybrid approaches, like those offered by platforms such as Airbyte, combine cloud-native efficiency with flexible deployment options that support both cloud and on-premises requirements based on specific organizational needs and compliance requirements.

.webp)